
At the end of this part you will have

An ANE that grabs frames from the native camera.

Time

15-20 minutes

Wait, have you done these first?

- Part 1: Create a test app – 15-20 minutes

- Part 2: Set up the Xcode project – 8-10 minutes

- Part 3: Set up the AIR Library – 8-10 minutes

- Part 4: Connect to the camera in Objective-C – 15-20 minutes

- Part 5: Start the camera from ActionScript – 5-6 minutes

You should have an Xcode iOS library project that looks roughly like this:

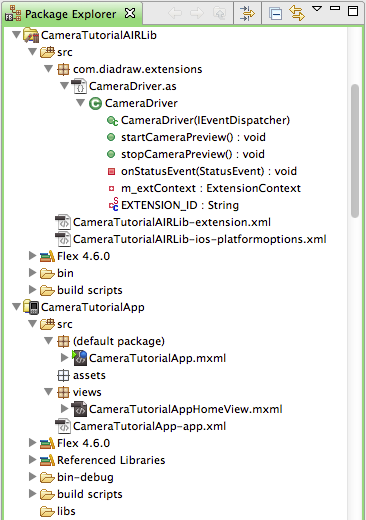

and a test app and Flex Library project that look like this:

If you aren’t interested in completing the full tutorial but want to see frame capturing with AVFoundation, stick around: this post should be quite informative.

Step 1: Hook to the video frame queue in the native library

Video frames from the camera arrive through the callback you added to your CameraDelegate class in CameraDelegate.m in Part 4 of the tutorial:

```objc
- ( void ) captureOutput: ( AVCaptureOutput * ) captureOutput
   didOutputSampleBuffer: ( CMSampleBufferRef ) sampleBuffer
          fromConnection: ( AVCaptureConnection * ) connection
{
    // 1. Check if this is the output we are expecting:
    if ( captureOutput == m_videoOutput )
    {
        // 2. If it's a video frame, copy it from the sample buffer:
        [ self copyVideoFrame: sampleBuffer ];
    }
}
```

captureOutput:didOutputSampleBuffer:fromConnection: is called every time a new frame is available. Note that this call happens on the dedicated queue you set up in Part 4, not on the main thread.

Step 2: Inspect data that comes from the camera

Have a look at captureOutput:didOutputSampleBuffer:fromConnection: again. It takes three arguments:

- AVCaptureOutput * captureOutput – this is the data output you set up in Part 4 of the tutorial:

```objc
AVCaptureVideoDataOutput * m_videoOutput; // For the video frame data from the camera
```

- AVCaptureConnection * connection allows you access to the device that data is coming from – in this case the camera.

- CMSampleBufferRef sampleBuffer is what we are most interested in right now: this sample buffer wraps the video frame pixels we want to copy and send to ActionScript. Depending on which output sampleBuffer comes from, it can contain an image or an audio frame. To get hold of an image frame, which is what we’re expecting here, you’ll query sampleBuffer for a reference to an image buffer and from the image buffer you’ll copy the raw pixels as bytes (done in Step 4 below).

Step 3: Add a way of storing copied frames

As we saw in Step 2, you’ll be copying bytes and storing them for ActionScript to access. There are a couple of things we need to consider:

- data structure: you’ll need something that is easy to allocate and to copy bytes into and out of. NSData and NSMutableData seem like good candidates.

- concurrency: we noted in Step 1 that data will be arriving on a queue of its own, not on the main thread. ActionScript, on the other hand, only runs on the main thread. So chances are that a new frame will arrive from the camera while ActionScript is still reading the previous one. One way of making sure that these two operations don’t step on each other’s toes is to keep a copy of an older frame for ActionScript to consume while copying each new frame into a fresh block of memory. On a mobile device you are short on RAM, and the system will shut your app down if it becomes too greedy for memory, so you’d better not keep too many old frames around. In this tutorial we’ll use a technique I refer to as fake triple buffering: it keeps only one old frame in your native library and does minimal synchronization between the main thread and the camera thread, so that neither of them is blocked for any length of time.
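The fake triple buffering scheme described in the concurrency point above can be modeled in plain C (which also compiles in an Objective-C file). This is a sketch under assumptions, not the tutorial’s code: Frame, FrameExchange and the exchange_* functions are hypothetical names, a pthread mutex stands in for @synchronized, and the consumer side, which the tutorial builds in Part 7, is assumed here to simply take the middle frame whenever a new one has arrived. The camera thread does its slow copy without holding the lock and only swaps pointers under it.

```c
#include <pthread.h>
#include <stdlib.h>
#include <string.h>

/* One copied video frame (hypothetical type). */
typedef struct {
    unsigned char *data;
    size_t length;
} Frame;

/* The three 'fake' buffers plus the lock that guards the hand-over. */
typedef struct {
    Frame *write;   /* camera thread only: the frame being copied */
    Frame *middle;  /* newest complete frame, shared, guarded by lock */
    Frame *read;    /* consumer thread only: valid until the next acquire */
    pthread_mutex_t lock;
} FrameExchange;

static Frame *frame_create(const unsigned char *bytes, size_t length) {
    Frame *f = malloc(sizeof *f);
    if (f == NULL) return NULL;
    f->data = malloc(length > 0 ? length : 1);
    if (f->data == NULL) { free(f); return NULL; }
    memcpy(f->data, bytes, length);
    f->length = length;
    return f;
}

static void frame_destroy(Frame *f) {
    if (f != NULL) {
        free(f->data);
        free(f);
    }
}

void exchange_init(FrameExchange *x) {
    x->write = NULL;
    x->middle = NULL;
    x->read = NULL;
    pthread_mutex_init(&x->lock, NULL);
}

/* Camera thread: copy into fresh memory without the lock, then publish. */
void exchange_publish(FrameExchange *x, const unsigned char *bytes, size_t length) {
    x->write = frame_create(bytes, length);
    if (x->write == NULL) return;             /* out of memory: skip this frame */

    pthread_mutex_lock(&x->lock);
    Frame *stale = x->middle;                 /* older frame nobody consumed yet */
    x->middle = x->write;
    x->write = NULL;
    pthread_mutex_unlock(&x->lock);

    frame_destroy(stale);                     /* free outside the lock */
}

/* Consumer thread: adopt the newest frame if one arrived, else keep the last one. */
const Frame *exchange_acquire(FrameExchange *x) {
    pthread_mutex_lock(&x->lock);
    Frame *fresh = x->middle;
    x->middle = NULL;
    pthread_mutex_unlock(&x->lock);

    if (fresh != NULL) {
        frame_destroy(x->read);               /* the previous frame is no longer needed */
        x->read = fresh;
    }
    return x->read;
}

void exchange_destroy(FrameExchange *x) {
    frame_destroy(x->write);
    frame_destroy(x->middle);
    frame_destroy(x->read);
    pthread_mutex_destroy(&x->lock);
}
```

If the camera publishes twice before the consumer looks, the first frame is simply dropped, so memory never holds more than the frame being copied, the middle frame and the frame being read. With ARC, the NSData version in this tutorial needs no explicit frees: assigning a new object to m_middleManBuffer releases the one it replaced.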

3.1. Declare your three ‘fake’ buffers as private members of CameraDelegate (find the @private directive in CameraDelegate.m):

```objc
NSData * m_cameraWriteBuffer;  // Pixels, captured from the camera, are copied here
NSData * m_middleManBuffer;    // Pixels will be read from here
NSData * m_consumerReadBuffer; // Pixels are copied from this buffer into the ActionScript buffer
```

3.2. Make sure they are initialized. Find CameraDelegate’s init() function, which you added in Step 10 of Part 4 of the tutorial, and add the initialization of the three buffers to it:

```objc
- ( id ) init
{
    ...
    // 2. Initialize members:
    ...
    // nil is the idiomatic 'no object' value for Objective-C object pointers:
    m_cameraWriteBuffer = nil;
    m_middleManBuffer = nil;
    m_consumerReadBuffer = nil;
    ...
}
```

Step 4: Access pixels in the sample buffer

Now that you’ve seen where video frames come from and what shape they arrive in, let’s get the actual pixels out of one of them.

Open CameraDelegate.m and find the placeholder function you added to it just for this purpose:

```objc
- ( void ) copyVideoFrame: ( CMSampleBufferRef ) sampleBuffer
{
    // TODO: To be implemented in the next part of this tutorial
}
```

Get rid of the //TODO: remark and add the following.

4.1. Get hold of the pixel buffer.

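```objc
// 1. Get a pointer to the pixel buffer:
CVPixelBufferRef pixelBuffer = ( CVPixelBufferRef ) CMSampleBufferGetImageBuffer( sampleBuffer );
if ( NULL == pixelBuffer ) return; // The sample buffer holds no image, so there is nothing to copy
```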

CVPixelBufferRef will give you information about the video frame and access to its data.

4.2. Lock the pixel buffer for reading. You’ll have to unlock it when you’re done with it (see Step 4.7 below).

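```objc
// 2. Obtain access to the pixel buffer by locking its base address:
CVOptionFlags lockFlags = 0; // If you are curious, look up the definition of CVOptionFlags
                             // (kCVPixelBufferLock_ReadOnly requests read-only access)
CVReturn status = CVPixelBufferLockBaseAddress( pixelBuffer, lockFlags );
assert( kCVReturnSuccess == status );
if ( kCVReturnSuccess != status ) return; // assert() does nothing when NDEBUG is defined
```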

4.3. Prepare to copy bytes from the pixel buffer: check how many there are and where they are in memory.

```objc
// 3. Copy bytes from the pixel buffer
// 3.1. First, work out how many bytes we need to copy:
size_t bytesPerRow = CVPixelBufferGetBytesPerRow( pixelBuffer );
size_t height = CVPixelBufferGetHeight( pixelBuffer );
NSUInteger numBytesToCopy = bytesPerRow * height;
// 3.2. Then work out where in memory we'll need to start copying:
void * startByte = CVPixelBufferGetBaseAddress( pixelBuffer );
```

4.4. Copy pixels into a new block of memory:

```objc
// 4. Allocate memory for copying the pixels and copy them:
m_cameraWriteBuffer = [ NSData dataWithBytes: startByte length: numBytesToCopy ];
```

If you are wondering why we allocate a new block of memory each time, have a look at fake triple buffering.

4.5. Move the copied frame along, so it can be accessed by ActionScript:

```objc
// 5. Make the copied bytes available for 'consuming' by ActionScript:
// 5.1. Block access to the two buffer pointers:
@synchronized( self )
{
    // 5.2. 'Swap' the buffer pointers:
    m_middleManBuffer = m_cameraWriteBuffer;
    m_cameraWriteBuffer = nil;
    m_frameWidth = ( int ) CVPixelBufferGetWidth( pixelBuffer ); // size_t to int
    m_frameHeight = ( int ) height;
}
```

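Here the @synchronized directive creates a mutex lock on self: while this block runs, no other thread can enter a block that also synchronizes on self. So as long as the code that reads m_middleManBuffer does so inside @synchronized( self ) too, it will never see the buffer pointer and frame size halfway through being changed.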

4.6. Store the video frame size

Did you notice the two variables I snuck into the last code block, m_frameWidth and m_frameHeight? They record how big each frame is, so that you can tell ActionScript later. We aren’t expecting the size to change from frame to frame in this tutorial, but it can if you decide to process the frames, by cropping them for example.

So declare these as private members (under the @private directive):

```objc
int m_frameWidth;
int m_frameHeight;
```

And make sure they get initialized when the camera starts. Add these two lines to your startCamera() function, just before you call [ m_captureSession startRunning ]:

```objc
- ( BOOL ) startCamera
{
    ...
    // 8. Initialize the frame size:
    m_frameWidth = 0;
    m_frameHeight = 0;

    // 9. 3, 2, 1, 0... Start!
    [ m_captureSession startRunning ];
    ...
}
```
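As Step 4.6 points out, processing such as cropping changes the frame size from what the camera delivers, which is why the size is stored alongside each frame. The plain C sketch below illustrates this. It assumes a tightly packed frame with 4 bytes per pixel (such as BGRA with no row padding), and crop_frame is a hypothetical helper, not part of the tutorial’s code.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical crop of a tightly packed, 4-byte-per-pixel frame.
   Copies the rectangle starting at pixel (x, y) with size crop_w x crop_h
   into dst, which must hold crop_w * crop_h * 4 bytes, and reports the new size.
   Returns 1 on success, 0 if the rectangle does not fit inside the source. */
int crop_frame(unsigned char *dst, const unsigned char *src,
               size_t src_width, size_t src_height,
               size_t x, size_t y, size_t crop_w, size_t crop_h,
               size_t *out_width, size_t *out_height) {
    const size_t bpp = 4; /* bytes per pixel */
    if (x + crop_w > src_width || y + crop_h > src_height) return 0;
    for (size_t row = 0; row < crop_h; ++row) {
        const unsigned char *from = src + ((y + row) * src_width + x) * bpp;
        memcpy(dst + row * crop_w * bpp, from, crop_w * bpp);
    }
    *out_width = crop_w;   /* these would go into m_frameWidth / m_frameHeight */
    *out_height = crop_h;
    return 1;
}
```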

4.7. Almost forgot!

Let’s unlock that poor pixel buffer:

```objc
// 6. Let go of the access to the pixel buffer by unlocking the base address:
CVOptionFlags unlockFlags = 0; // Must match the flags passed to CVPixelBufferLockBaseAddress in 4.2
CVPixelBufferUnlockBaseAddress( pixelBuffer, unlockFlags );
```

And that’s it, you have secured a frame from the camera.

What’s next?

- If you are still wondering what that ‘fake triple buffering’ is all about, check it out before moving on. Like the cat selfie?

- Next, though, it’s time to see those frames on the screen, don’t you think? That is the objective of Part 7: Pass video frames to ActionScript (15-20 minutes).

- Here is the table of contents for the tutorial, in case you want to jump back or ahead.

Check out the DiaDraw Camera Driver ANE.

I want to read data from the delegate method and put it into a buffer that I can read from later. This buffer should be able to trim the video between two points in time. How can I do it?

Hi ganidu,

To record video you can use AVAssetWriter and add frames to it as they arrive. You can then save only part of the resulting video between second A and second B. Our Screen Recorder Native Extension + Source Code uses this technique for video composition if you want to use it as a starting point.
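Whichever recording route you take, trimming between second A and second B comes down to deciding, for each frame, whether its presentation time falls inside that window. Here is a plain C sketch of just that check. FrameTime and frame_in_window are hypothetical names; the struct only mimics the value/timescale pair of CoreMedia’s CMTime. In real code you would get the timestamp with CMSampleBufferGetPresentationTimeStamp( sampleBuffer ) and could use CoreMedia’s CMTimeRangeContainsTime instead.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical timestamp modeled on CMTime: seconds = value / timescale. */
typedef struct {
    int64_t value;
    int32_t timescale;
} FrameTime;

/* True if t lies in the half-open window [start_s, end_s), in whole seconds.
   Compares value against seconds * timescale, so no floating point is needed. */
bool frame_in_window(FrameTime t, int64_t start_s, int64_t end_s) {
    if (t.timescale <= 0) return false; /* invalid timestamp */
    int64_t start = start_s * t.timescale;
    int64_t end = end_s * t.timescale;
    return t.value >= start && t.value < end;
}
```

Frames for which this returns true would be the ones you append to the writer; the rest are skipped.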